The organization I work for is fortunate, very fortunate indeed, to have a grant from the USDOE as part of its Arts Education Model Development and Dissemination (AEMDD) program.

It is nearly impossible to be awarded one of these highly competitive grants unless you have a quasi-experimental research design as part of the overall project design. Essentially, what makes it a quasi-experimental design is that students are not randomly assigned to the control group. It does have a control group (otherwise it would be a non-experimental design), and the common lens of research across all of these USDOE AEMDD grants is standardized test scores in ELA and math.

The USDOE is particularly interested in the question of how the project (let’s use the term “treatment”) will affect the ELA and math test scores of students who participate versus students of similar demographics who do not.

Today more than ever, using the state ELA and math tests raises a very complicated question that you might not have dealt with or considered a few years ago. It is provoked by the test scores having risen dramatically across New York State over the past couple of years, in nearly every school district regardless of the approach of the individual district.

So, you’ve got scores catapulting across the State of New York, no matter what the treatment, reform, or intervention, and here we are trying to measure our program using these very same test scores.

Yes, of course, the research will still report out on the differences between students who are in the program and those who are not. So, what’s the big deal, you might ask?

But wait, consider this: the gold standard of ELA and math assessment, the NAEP scores, are at odds with these increases. And there’s more: a fair number of people in education are either reporting or suspecting an increase in cheating, or in scores being changed by educators, as an outgrowth of the increasing stakes associated with these tests.

Do you see a problem?

No? Yes? Maybe?

All this has led many to question the validity of these tests. The Chancellor of the New York State Board of Regents, Merryl Tisch, recently addressed this issue by saying that the tests would be revised to make them less predictable.

So, you might have thought this post would be the usual jeremiad against standardized testing leading to a narrowing of the curriculum. Nope, this is an altogether different twist, essentially centered in fundamental questions about the validity of research components that are based on these test scores.

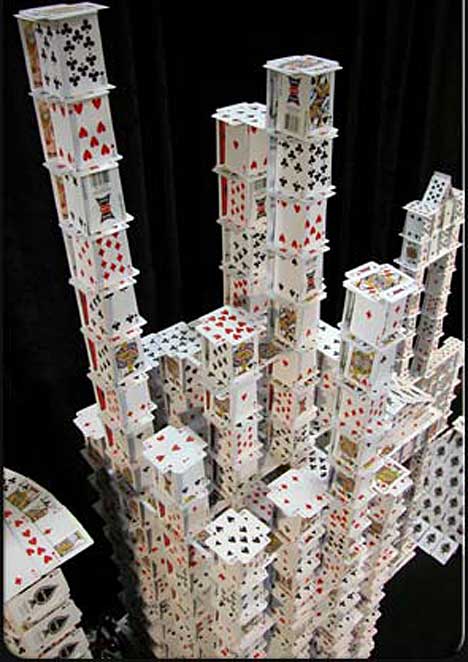

Now, to be fair, we are looking at a host of other issues, both qualitative and quantitative. But when considerable questions are being raised about the standardized tests themselves, whatever research you might be doing on the effects of your program on ELA and math scores risks becoming an even bigger house of cards than ever before.

Good twist on the usual, as you said. Thanks. So what else are you doing to document the effects of your program? We, in another AEMDD program, are struggling with documentation, and with changes (such as with your state’s testing) in just about every component of our program. 2010-11 is our final year, and I’m hoping to compile a narrative (profiles of teachers, artists, etc.) to accompany the data and lesson plans, because early on I gave up on the benefits of our work manifesting in test scores (because of said changes and many other outside factors) and because it’s important to relay the best part of this whole experience: what happens in the classroom.

Another Q: in what ways is your arts organization fortunate to have an AEMDD program?

How unfortunate that you mention NAEP in your article. There were not enough schools/districts that even offered arts courses/classes taught by licensed professional arts teachers that allowed ‘testing’ in areas of the arts that were previously tested.

My concern is that we educators should be advocating for alternative forms of performance-based assessment for ALL subjects in the curriculum BUT states and the federal government through NCLB have determined that ‘standardized tests’ are the gold standard.

I agree with your analysis of state-wide tests and their relationship to validity/reliability coefficients. I actually contacted the test makers in Ohio when the tests were being developed and asked for the numbers. At that time, they did not have them. They have since established the reliability and validity of the tests. It was the best available at the time.

So, what does one do? Look at the treatment. Compare the scores of two groups that differ only in their treatment with those of a third group that receives no treatment. This is what was done with SPECTRA+ using a modified control group, thanks to Dr. Richard Luftig, formerly of Miami University, Ohio.

There are other methodologies available to researchers, including strong ethnographic and phenomenological designs.

Perhaps we need to step out of our boxes and rethink what research is. Quasi or random, we are telling the story, painting the picture, composing the film from the frames of a child’s experience in order to demonstrate to others what we know to be true: the arts do make a difference in all our lives.

Hi Jackie.

Well, the NAEP issue is not exactly correct. There was not enough dance and theater instruction to produce the sample size they were looking for. That was not true for music and visual arts at the eighth-grade level, the grade that the NAEP arts assessment was concerned with.

I do think there is growing interest in refining and improving state assessments. How much, and where the interest is coming from, remains to be seen.

Well, the funding, the imprimatur, etc., made possible an expansion of an approach we were deeply committed to. A million dollars over four years is a terrific grant. We’re eager to apply again…

Well, the beautiful thing about the AEMDD program is that while they are interested in ELA and math scores on a universal basis across all the different programs they fund, they support the development of project-specific goals too. So, we’ve got a very good broad-based qualitative assessment running at the same time, looking at a variety of indicators related to what the program is really about, well beyond the improvement of test scores in ELA and math.

The final year must be exciting as it gives you the chance to present your findings to the field. Congratulations!